Non-Generative uses of Local LLMs

Update Oct. 21st: The transcription portion of the post has been updated, what I originally mistook as issues with how the data was formatted was an issue with too many tokens in the transcript I wanted transcribed.

At this point we all know how LLMs can generate text, and I’m guessing that everybody reading this knows some relatively lightweight libre LLMs can be installed and run locally. But, as you can probably guess by reading this, I enjoy writing stuff, so text generation isn’t really something that I have a use for. The knowledge stored in them is helpful for sure; I use local LLMs to find data or troubleshoot something on probably more or less a once-a-week basis (because I’m not connected to the internet, can’t find what I’m looking for in search engines, want to ask about an error code in plain English, etc). They’re also always fun to toy around with at first, but after using them for a while the fun wears off and it just becomes another tool.

So, what else can we do with them? I’ve touched on LLMs in the past, but this one will be more hyper-focused on three tasks I’ve been experimenting with: proofreading, translating, and transcriptions. I touched on two of the three in the other post, but this one will be more detailed with examples instead of just saying, “I tried it, seemed to work.”

What I Used

Everything you’ll see in the examples was accomplished using Dolphin 2.2.1 Mixtral 7b (Q 5.13 GB) in LM Studio 0.2.3 and Dolphin 2.8 Mixtral 7b (Q 3.83 GB) in LM Studio 0.3.2. The difference in versions is only because this has been a blog post built around a bunch of notes and tests I’ve done over a relatively long period of time1 and not because one version worked much better. I also tried these with Google Gemma and Dolphin Phi 2, both quantized around ~2GB. They output the data a lot faster, but they were a little less reliable.

Free Non-Local Versions

As a side note, if you are unable to run an LLM on your machine, you do have a few options to get access to an LLM over the internet without needing an account or payment:

- ChatGPT offers free access to ChatGPT 4o

- DuckDuckGo offers duck chat with free proxied access to ChatGPT 4o and free access to Claud, Lama, and Mixtral hosted by DuckDuckGo.

- Brave (built-in, can be enabled/disabled) and Firefox (though Mozilla’s Orbit extension) offer free access to some AIs, including Mixtral.

- Hugging Face often has various models available to try in a browser

Now, if it only works with an internet connection and you have to rely on somebody else’s (potentially proprietary) server, then it negates some of the benefits here. A local LLM is kinda like a Swiss army knife: good at a lot of things but great at none, so if you’re in a browser it’d probably just be better to navigate to something like Google Translate than it would be to navigate to Duck Chat and paste in text to translate.

There are also usually limits to using free online services. You generally can’t set a system prompt, though you can work around it by pasting the system prompt in the chatbox as instructions and then pasting in what you want to process below the system prompt. Similarly, you probably can’t upload files, but opening them in a browser with AI built-in/added as an extension and prompting it to work with the file could give you similar results.

Proofreading

I tried proofreading with four models: Karen the Editor (creative and strict), Google Gemma, and Dolphin. Karen the Editor is an AI model specifically tweaked for proofreading, strict being a slightly more conservative version that makes fewer changes and creative being more active in its changes. It could be my writing style or the fact that I generally proofread before giving them to a proofreading tool (be it an AI tool or something like Grammerly), but both versions gave nearly identical output for me. It works pretty well, but I got just about identical results with Dolphin using the system prompt below, so given I already have Dolphin installed, I might as well use that.

Google Gemma, specifically the version quantized to 1.59 GB, ran into some issues. While it was lightning fast, no matter what I did for prompts, it kept rewording things in ways I didn’t like. For example, changing “looking for” to “searching for” or turning a slightly long sentence into two stubby sentences. Overall, I’ve had the best luck so far using Dolphin with the following system prompt.

You are an advanced proofreading assistant. Your task is to review the provided text for spelling, punctuation, and grammatical errors. Make corrections as needed, ensuring that the text is clear and coherent. When rewording or rephrasing sentences, aim for a more conservative approach that maintains the original meaning while enhancing readability. Focus on subtle improvements rather than extensive rewrites.

In my original AI post I had said that I sometimes found Dolphin changing more than I would have wanted when proofreading, but with the above system prompt it’s almost too conservative when making changes. So far, I’ve seen it mostly only fixes spelling and punctuation mistakes, sometimes fixing run-on sentences or swapping words for other words (or putting in missing words), but generally they seem to be decent improvements. While not as effective as something like Grammarly, it is by far the best open-source proofreading tool and the best offline proofreading tool I’ve used.

In my original blog post, I mentioned that it starts to try to summarize content instead of proofreading once I paste in something that exceeds a certain length (usually 600-800 words). However, Dolphin 2.8 seems to have fixed that, and feeding it a 2k-word blog post worked fine. It also works well with markdown, although if you start sticking html code into your text it’ll start messing with that. While I will probably continue using Grammarly for the foreseeable future, LLMs are definitely working well.

Finally, to clarify a point of worry some people might have, I’ve proofread text written by me and then fed it through all the different free AI writing checkers - none thought the text was written with AI. I would exercise some caution if you’re a student who’d risk some sort of disciplinary action if your work was flagged as written by AI, but if you’re just cautious about having people think that your writing wasn’t your writing because you proofread it with an AI tool then you should be fine.

Translation

As I mentioned in my last post, LLMs seem to do well with translation. Google Translate could probably be considered a good benchmark; while I’m not sure if it’s the best standard translation tool, I’d guess it’s the most used. I used the online version of Google Translate, the offline version of Google Translate, and Dolphin (I can’t remember if it was 2.2.1 or 2.8). I also tried Gemma, though while it worked it seemed somewhat less accurate.

Side note for those running Android ROMs: I wanted to give offline Google Translate a go and was pleasantly surprised. First, it works even if you don’t have Google Play services installed on the device - a rarity for most Google software. It also works without a Google account, and once you initially download languages for offline use you can yoink the internet permission2 away from the app and it’ll never connect to a remote service again. I think I just unironically praised a Google app.

To run the tests I stole a paragraph from Yaroslav de la Peña Smirnov. I’m hardly fluent in English, much less capable of understanding a second language, but the great thing about Yaroslav is that he publishes his content in English, Spanish, and Russian. Here we know exactly what he intended to say, which can provide us a fairly good way of judging how well the various translations succeeded in getting that across. His posts appear to vary slightly in wording across each language, but we can at least have a good idea of what he intended to say.

For each AI translation I used the following system prompt:

You are a highly accurate translation tool. Your primary function is to translate text from any language into English. When translating, please adhere to the following guidelines:

Contextual Understanding: Consider the context of the text to ensure that idiomatic expressions, cultural references, and nuances are accurately conveyed in English.

Clarity and Precision: Aim for clear and precise translations. Avoid ambiguity and ensure that the meaning of the original text is preserved.

Grammar and Syntax: Maintain proper English grammar and syntax in your translations. The output should read naturally as if it were originally written in English.

Formatting: Retain the original formatting of the text where applicable, including punctuation, bullet points, and numbered lists.

Feedback Loop: If a phrase or term has multiple meanings, provide the most common translation first, and if necessary, offer alternative translations in parentheses.

Language Detection: Automatically detect the language of the input text and confirm the detected language before proceeding with the translation.

User Queries: If the user requests clarification or additional information about the translation, provide explanations or context as needed.

Your goal is to facilitate effective communication by providing accurate and reliable translations from any language to English.

Here’s the unmodified English version of the paragraph:

Hello, and welcome to my weblog. As you may have read in my home page, I will be writing here mainly about what I do, and how I do it. That is, I aim to make a sort of compendium for myself as a self-learning tool; for future reference, when I need to refresh my memory, and of course for anybody else that shares my interests and wishes to learn something new. I am by no means an expert, but I believe that it is easier to learn by sharing your knowledge.

And here’s the same paragraph from the Spanish version of his website:

Hola, y bienvenidos a mi diario. Como a lo mejor habrás leído en mi página principal, estaré escribiendo en este diario más que nada acerca de lo que hago, y como hago lo que hago (vaya pleonasmo que me he hechado). En otras palabras, mi propósito es hacer un tipo de cuaderno/diario digital con apuntes para mi mismo, acerca de qué es lo que hago y describiendo el proceso. Me sirve más que nada como herramienta autodidáctica, y para futura referencia, cuando necesite refrescar mi memoria, y por último, pero no menos importante, por si sirve de ayuda a otras personas que se interesan por lo mismo que yo. No soy un experto que todo lo sabe, pero bien se dice que se aprende mejor cuando compartes tus conocimientos.

And here’s the same paragraph from the Russian version of his website:

Доброго времени суток, добро пожаловать в мой веб-журнал. В нем я буду рассказывать о том чем я занимаюсь, и буду писать о том как все это делаю. Цель данного журнала - сделать для себя такой вид электронных конспектов где для себя описываю процесс по которому я разрабатываю софт и сайты, настраиваю линукс, и тому подобные темы, чтобы таким образом я мог обратиться к своими же записям когда надо будет что-то вспомнить. Я также считаю что это может пригодиться и другим людям, и затем я записываю все жто в открытом доступе. Я не эксперт, но я считаю что делясь знаниями и опытом, я сам лучше учусь.

Google Translate (online) Spanish to English:

Hello, and welcome to my journal. As you may have read on my main page, I’ll be writing in this journal mostly about what I do, and how I do what I do (what a pleonasm I’ve made). In other words, my goal is to make a kind of digital notebook/diary with notes for myself, about what I do and describing the process. I use it mostly as a self-teaching tool, and for future reference when I need to refresh my memory, and last but not least, in case it helps other people who are interested in the same things as me. I’m not an expert who knows everything, but it is well said that you learn better when you share your knowledge.

Google Translate (offline) Spanish to English:

Hello, and welcome to my diary. As you may have read on my home page, I will be writing in this newspaper more than anything about what I do, and how I do what I do (what a pleonasm that I have given myself). In other words, my purpose is to make a type of notebook/digital diary with notes for myself, about what I do and describing the process. It serves me more than anything as a self-taught tool, and for future reference, when I need to refresh my memory, and lastly, but not least, in case it helps other people who are interested in the same thing as me. I am not an expert who knows everything, but it is well said that you learn better when you share your knowledge.

Dolphin LLM Spanish to English:

Hi and welcome to my blog! As you may have read on my main page, I will be writing in this blog mainly about what I do and how I do it (what a pleonasm I made for myself). In other words, my purpose is to create some kind of digital notebook/diary with notes for myself, about what I do and describing the process. It serves me above all as a self-learning tool, and for future reference when I need to refresh my memory, and last but not least, but no less important, in case it helps others who are interested in the same things as I am. I’m not an expert who knows everything, but as they say, you learn best by sharing your knowledge.

Google Translate (online) Russian to English:

Good day, welcome to my web journal. In it I will talk about what I do, and I will write about how I do it all. The purpose of this journal is to make for myself such a kind of electronic notes where I describe for myself the process by which I develop software and sites, configure Linux, and similar topics, so that in this way I can refer to my own notes when I need to remember something. I also believe that this can be useful to other people, and then I write it all down in the public domain. I am not an expert, but I believe that by sharing knowledge and experience, I myself learn better.

Google Translate (offline) Russian to English:

Good day, welcome to my web log. In it, I will talk about what I do, and I will write about how I do all this. The purpose of this magazine is to make for myself such a type of electronic notes where for myself I describe the process by which I develop software and sites, set up Linux, and similar topics, so that in this way I can turn to my own records when I need to remember something. I also believe that this may be useful to other people, and then I write everything in the public domain. I am not an expert, but I think that by sharing knowledge and experience, I myself study better.

Dolphin LLM Russian to English:

Good afternoon, welcome to my blog! Here I will talk about what I do and write about how I do it. The goal of this blog is to create a kind of electronic notebook where I describe the process by which I develop software and websites, configure Linux, and other related topics, so that I can refer back to them when needed. I also believe that this may be useful for others, and then I will post everything publicly. I am not an expert, but I believe that sharing knowledge and experience helps me learn better.

Dolphin seems nearly on par with the online version of Google Translate. Dolphin might have an edge on that paragraph, although with a sample size of one I’m not fully committed to concluding that Dolphin beats the online version of Google Translate. However, it looks like it beats the offline version of Google Translate, and with Dolphin being both fully offline and libre, I’d say it seems the better choice of all three options if you have a computer powerful enough to run it.

Translating Images & Audio

However, not everything is plain text, so how well can we use local AI to translate audio content or images? The LLM itself can only accept text based input, so I’m going to take advantage of two other AIs to get us the text.

First is Whisper AI by OpenAI, an open source audio transcription tool that runs entirely offline. It offers simple transcriptions in various languages and is perfect for this task. For this experiment I used the small algorithm. Secondly, Tesseract by Google is an OCR (optical character recognition) tool that’s also open source and runs entirely offline. One minor thing of note: I had the English model installed already but never installed the Spanish one for the test.

So, let’s start out with audio. I started with the first minute and twelve seconds of Maria Graciani’s Tedx Talk which I downloaded with YT-DLP.

Google offers automatically translated subtitles, which come out to the following:

Thank you very much Pablo When someone asks me who I am I love to answer I am illusion personified understanding illusion as incessant loyalty to oneself synergy of passion because when you believe in yourself you make others believe and so on you manage to create your world and This is what I did through the language of motivation and of course to make sure I did it perfectly well in the first place I consulted with the experts the children

However, we’re not too interested in Google’s translation; I’m more focused on what we can do with offline AI models. The first thing I did was run it through WhisperAI, getting the following output:

[00:00.000 --> 00:10.800\] Muchísimas gracias Pablo. Cuando alguien me pregunta que quién soy, a mí me encanta

[00:10.800 --> 00:18.680] responder. Soy la ilusión personificada. Entendiendo ilusión como incesante lealtad

[00:18.680 --> 00:27.360] a uno mismo. Sinergia de la pasión. Ilusión. Porque cuando tú crees en ti mismo, haces

[00:27.360 --> 00:34.080] que los demás crean y así logras crear tu mundo. Y esto fue lo que yo hice a través

[00:34.080 --> 00:40.640] del lenguaje de la motivación. Y como no, para asegurarme de hacerlo perfectamente bien,

[00:40.640 --> 00:44.520] en primer lugar, consulté con los expertos, los niños.

Next, it was as simple as pasting in the output from Whisper AI to Dolphin. It returned the following:

[00:00.000 --> 00:10.800] Thank you very much, Pablo. When someone asks me who I am, to me it's wonderful

[00:10.800 --> 00:18.680] to respond. I am personified illusion. Understanding illusion as incessant loyalty

[00:18.680 --> 00:27.360] to oneself. Synergy of passion. Illusion. Because when you believe in yourself, you make others

[00:27.360 --> 00:34.080] believe and thus create your own world. And this is what I did through the language of motivation. And of course, to make sure I did it perfectly well, firstly

[00:34.080 --> 00:40.640] I consulted with experts, the children.

So yeah, it works pretty well here. Using two offline and open source AI models we can take an audio or video file and translate it to any language.

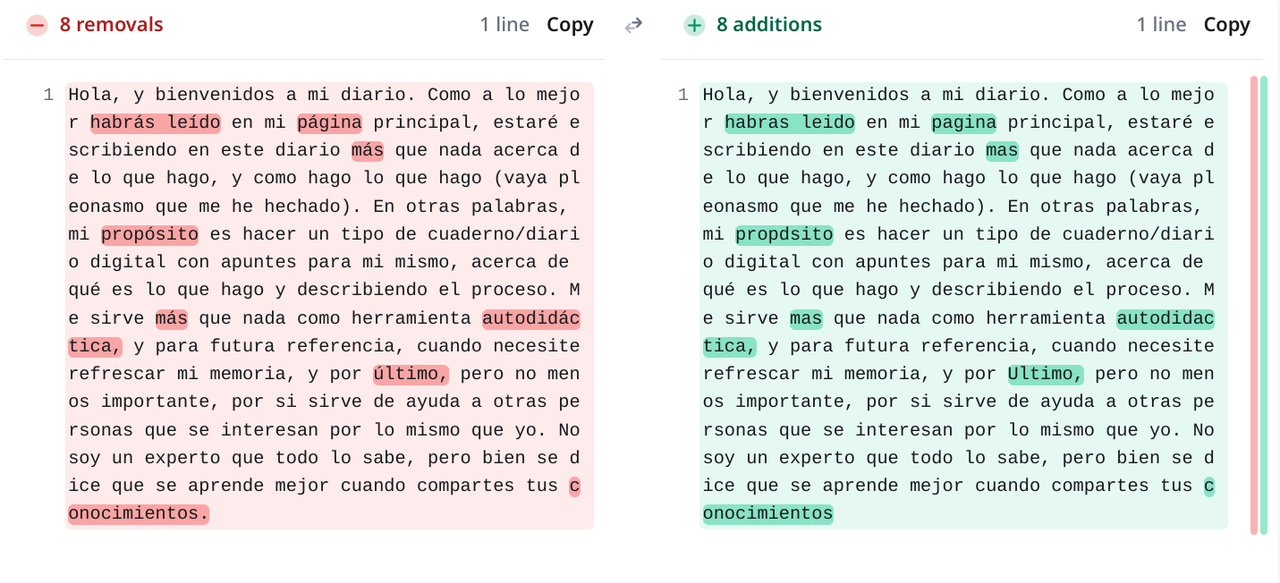

But what about images? Pretty much the same deal, the LLM isn’t going to be able to understand an image so I fed a screenshot of the same text from Yaroslav into Tesseract.

As you’ll notice in the difference checker, it’s missing some Spanish characters, likely because I used the English version of the model. Still, the LLM translated the text from Tesseract to the exact same output as it gave me when I pasted in the text from his website directly.

Transcribing an Audio File to Essay/Article

After using the speech-to-text, I wondered how well I could turn an audio transcript into something that has an essay/article layout. Spoiler: it worked flawlessly. So, why would you want to do this (aside from me doing it because I wanted to experiment with things)? I could see a few reasons:

First, you could prefer to speak instead of type, either because of preference or a disability that makes typing hard. Talk into a microphone for a while then hand that off to some software and your computer will create a decently formatted essay style document.

Or, perhaps you’re primarily making audio content, but want to offer another way to consume said content. Video essayists, podcasters, etc. Large swaths of YouTube videos and just about any podcast is all audio content only - just in the case of YouTube there’s some background footage or gameplay to keep people watching the video visually engaged. You could provide people with content on a blog or newsletter with a few quick clicks.

Similarly, you could be consuming content from others in video/audio form and want to read it instead. Whether it be disability related, a preference, or a unique use case (say, an e-ink device with incredible battery life to consume content without access to the internet or electricity for long periods). Regardless, you’re only a couple clicks away from having your computer convert audio/video content into an essay style text document to read.

Lastly, archival is another great potential use for this. Say I wanted to archive a backlog of podcasts, video essays, speeches, or commentary videos. Sure, the audio/video versions would be ideal, but if you could turn a couple terabytes of audio or video into a couple hundred megabytes of text then that’d be a pretty darn significant upgrade in how much content you could preserve. Talk about an effective compression algorithm3.

Transcribing an Audio File

I started with this video of a speech by Winston Churchill. I initially decided to use it because I wanted to demonstrate an entire video to show accuracy, and angering copyright holders was not something I wanted to do. However, it worked out pretty well since it demonstrated success even with audio that’s not super crisp and professionally recorded with modern equipment.

The first thing I did was download the video using YT-DLP in M4A format. Afterwards, running it through whisper AI using the small English model gave the following results:

Dropdown: Output from Whisper AI

[00:00.000 –> 00:11.500] When a week ago today, Mr. Speaker, I asked the House to fix this afternoon at the occasion [00:11.500 –> 00:20.540] for a statement, I feared it would be my hard lot to announce the greatest military disaster [00:20.540 –> 00:24.180] in our long history. [00:24.180 –> 00:31.840] I thought, and some good judge agreed with me, that perhaps 20 or 30,000 men might be [00:31.840 –> 00:32.840] reimbursed. [00:32.840 –> 00:39.720] But it suddenly seemed that the whole of the French First Army and the whole of the [00:39.720 –> 00:48.540] British Expeditionary Force, north of the Amia Abbeyal Yap, would be broken up in the [00:48.540 –> 00:54.640] open field, or else would have to capitulate for lack of food and ammunition. [00:54.640 –> 01:01.680] These were the hard and heavy tidings, which I called upon the House and the nations to [01:01.680 –> 01:05.920] prepare themselves a week ago. [01:05.920 –> 01:13.920] The whole root and core and brain of the British Army, on which and around which we were to [01:13.920 –> 01:21.240] build and are to build, the great British armies in the later years of the war seemed [01:21.240 –> 01:29.480] about to perish upon the field, or be led into an ignominious and starving captivity. [01:29.480 –> 01:38.720] The enemy attacked us on all sides with great strength and fierceness, and their main power, [01:38.720 –> 01:46.040] the power of their far more numerous air force, was thrown into the battle, or else concentrated [01:46.040 –> 01:49.400] upon Dunkirk and the beaches. [01:49.400 –> 01:56.200] Pressing in upon the narrow exit, both from the east and from the west, the enemy began [01:56.200 –> 02:02.960] to fire with cannons upon the beaches by which alone the shipping could approach our depart. [02:02.960 –> 02:07.040] They sowed magnetic mines in the channels and seas. [02:07.040 –> 02:12.760] They sent repeated waves of hostile aircraft, sometimes more than a hundred strong in one [02:12.760 –> 02:20.400] formation, to craft their bombs upon the single pier that remained, and upon the sand dunes [02:20.400 –> 02:26.040] on which the troops had their only shelter. [02:26.040 –> 02:33.040] Now you both, one of which were sunk, and their motor launches took the toll of the [02:33.040 –> 02:36.200] vast traffic which now began. [02:36.200 –> 02:39.960] For four or five days an intense struggle rained. [02:39.960 –> 02:45.520] All their armored divisions, or what was left of them, together with great masses of infantry [02:45.520 –> 02:53.000] and artillery, hurled themselves in vain upon the ever-narrowing, ever-contracting appendix [02:53.040 –> 02:57.760] within which the British and French armies fought. [02:57.760 –> 03:04.040] Meanwhile the Royal Navy, with the willing help of Countess Merchant Seaman, strained [03:04.040 –> 03:09.440] every nerve to embark the British and Allied troops. [03:09.440 –> 03:17.080] Two hundred and twenty light warships and six hundred and fifty other vessels were engaged. [03:17.080 –> 03:23.280] They had to operate upon the difficult coast, and often in adverse weather, under an almost [03:23.280 –> 03:29.800] ceaseless hail of bombs, and an increasing concentration of artillery fire. [03:29.800 –> 03:36.160] Nor were the seas, as I have said, themselves free from mines and torpedoes. [03:36.160 –> 03:43.120] It was in conditions such as these that our men carried on with little or no rest for [03:43.120 –> 03:50.480] days and nights on end, making trip after trip across the dangerous waters, bringing [03:50.480 –> 03:55.600] with them always men who may address you. [03:55.600 –> 04:02.160] The numbers they have brought back are the measure of their devotion and their courage. [04:02.160 –> 04:06.920] The hospital ships which brought off many thousands of British and French wounded, being [04:06.920 –> 04:14.000] so plainly marked, were a special target for Nazi bombs, but the men and women on board [04:14.000 –> 04:17.560] them never faltered in their duty. [04:17.560 –> 04:24.880] Meanwhile the Royal Air Force, which had already been intervening in the battle, so far as [04:24.880 –> 04:32.400] its range would allow, from our home bases, now used part of its main metropolitan fighter [04:32.400 –> 04:40.680] strength, and struck at the German bombers, and at the fighters, which in large numbers [04:40.680 –> 04:43.520] protected them. [04:43.520 –> 04:46.160] This struggle was protracted in fear. [04:46.160 –> 04:50.240] Suddenly, the scene is cleared. [04:50.240 –> 04:57.760] The crash and sundry adds to the moment, but only for the moment, died away. [04:57.760 –> 05:05.800] A miracle of deliverance achieved by valor, by perseverance, by perfect discipline, by [05:05.800 –> 05:14.280] faultless service, by resource, by skill, by unconquerable fidelity, it manifests to us [05:14.280 –> 05:16.520] all. [05:16.520 –> 05:23.000] The enemy was hurled back by the retreating British troops. [05:23.000 –> 05:29.040] They were so roughly handled that he did not carry their departure seriously. [05:29.040 –> 05:39.040] Sir, we must be very careful not to assign to this deliverance the attributes of a victory. [05:39.040 –> 05:45.800] Wars are not won by evacuations, but there was a victory inside this deliverance which [05:45.800 –> 05:47.920] would be noted. [05:47.920 –> 05:51.120] These were gained by the Air Force. [05:51.120 –> 05:56.440] Many of our soldiers coming back have not seen the Air Force at work. [05:56.440 –> 06:01.440] They saw only the bombers, which escaped its protective attack. [06:01.440 –> 06:04.200] They underrated its achievements. [06:04.200 –> 06:11.160] I’ve heard much talk of this, and that is why I go out of my way to say this. [06:11.160 –> 06:13.240] I will tell you about it. [06:13.240 –> 06:19.400] This was a great trial of strength between the British and German Air Force. [06:19.400 –> 06:27.400] Can you conceive a greater objective for the Germans in the air than to make evacuation [06:27.400 –> 06:34.040] from these beaches impossible, and to sink all these ships which were displayed almost [06:34.040 –> 06:36.960] to the extent of thousands? [06:36.960 –> 06:42.320] Could there have been an objective of greater military importance and significance for the [06:42.320 –> 06:45.080] old purpose of the wars of this? [06:45.080 –> 06:48.680] They tried hard, and they were beaten back. [06:48.680 –> 06:51.960] They were frustrated in their task. [06:51.960 –> 07:01.360] We got the army away, and they have paid fourfold for any losses which they have inflicted. [07:01.360 –> 07:07.960] Sir, when we consider how much greater would be our advantage in defending the air above [07:07.960 –> 07:13.680] this island again for no overseas attack, I must say that I find in these facts a shrubb [07:13.680 –> 07:19.520] basis upon which practical and reassuring thoughts may rest. [07:19.520 –> 07:23.760] I will pay my tribute to these young Germans. [07:23.760 –> 07:33.080] The great French army was very largely for the time being charged back and disturbed [07:33.080 –> 07:38.080] by the enraged for a few thousands of armored vehicles. [07:38.080 –> 07:47.800] May it not also be that the court of civilisation itself will be defended by the skill and devotion [07:47.800 –> 07:51.000] of a few thousand Germans. [07:51.000 –> 07:58.560] There never had been, I suppose, in all the world, in all the history of war, such an opportunity [07:58.560 –> 07:59.560] for use. [07:59.560 –> 08:07.080] The knights of the round table, the crusaders, all fall back into the past. [08:07.080 –> 08:12.240] Not only distant, but protege. [08:12.240 –> 08:17.360] These young men going forth every morn to guard their native land and all that we stand [08:17.360 –> 08:25.400] for, holding in their hands the instruments of colossal and shattered power of whom it [08:25.400 –> 08:32.520] may be said that every morn brought forth a noble chance, and every chance brought forth [08:32.520 –> 08:42.720] a noble knight, deserve our gratitude, and do all the brave men in so many ways and on [08:42.720 –> 08:51.880] so many occasions are ready and continue ready to give life and all their native land. [08:51.880 –> 08:59.400] For this, our thankfulness, at the escape of our army, and so many men who loved ones [08:59.400 –> 09:06.280] have passed through an agonising week, but not blind us to the fact that what happened [09:06.280 –> 09:12.960] in France and Belgium is a colossal military disaster. [09:12.960 –> 09:19.120] The French army has been weakened, the Belgian army has been lost, a large part of those [09:19.240 –> 09:26.120] fortified lines upon which so much faith has been reposed, it’s gone, many valuable mining [09:26.120 –> 09:31.920] districts and factories have passed into the enemy’s possession, a whole of the channel [09:31.920 –> 09:39.560] ports, 90 hands, there’s all the tragic consequences that follow from that, and we must expect [09:39.560 –> 09:46.240] another blow to be struck, almost immediately, at us or at France. [09:46.360 –> 09:52.920] We are told, sir, that’s how Hitler had a plan for invading the British Isles. [09:52.920 –> 09:56.440] This is often being thought of before. [09:56.440 –> 10:03.680] When Napoleon laid Boulogne for a year with his fat bottom boats and his grand army, he [10:03.680 –> 10:10.720] was told by someone, there are bitter weeds in England. [10:10.720 –> 10:16.000] There are certainly a great many more of them since the British expeditionary force returned. [10:16.000 –> 10:23.480] Sir, I have my self-full confidence that if all do their duty, if nothing is neglected, [10:23.480 –> 10:29.560] and if the best arrangements are made, as they are being made, we should prove ourselves [10:29.560 –> 10:36.360] once more able to defend our island home, to ride out to the storm of war, and to our [10:36.480 –> 10:45.480] lives of menace of tyranny, if necessary, for years, if necessary, alone. [10:46.160 –> 10:49.560] At any rate, that is what we are going to try to do. [10:49.560 –> 10:53.960] That is the result of his majesty and government, every man of them. [10:53.960 –> 10:56.520] That is the will of parliament and the nation. [10:56.520 –> 11:02.160] The British Empire and the French Republic, linked together in their cause and in their [11:02.200 –> 11:08.560] need, will defend to the death their native soil, aiding each other like good comrades [11:08.560 –> 11:10.840] to the utmost of their strength. [11:10.840 –> 11:17.200] We shall go on to the end, we shall fight in France, we shall fight on the seas and [11:17.200 –> 11:24.200] oceans, we shall fight with growing confidence and growing strength in the air, we shall [11:25.120 –> 11:31.640] defend our island, whatever the cost may be, we shall fight on the beaches, we shall fight [11:31.720 –> 11:38.080] on the landing grounds, we shall fight in the fields and in the streets, we shall fight [11:38.080 –> 11:45.080] in the hills, we shall never surrender, and if, which I do not for a moment believe, this [11:46.760 –> 11:53.760] island or large part of it was subjugated and starving, then our empire beyond the seas [11:54.760 –> 12:01.760] armed and guarded by the British fleet would carry on the struggle until, in God’s good [12:02.280 –> 12:09.280] time, the new world with all its power might step forth to the rescue and the liberation [12:10.200 –> 12:11.000] of the old.The output worked really well. It’s not 100% perfect; it made a few mistakes, such as “U boats” becoming “You both” and “Thunder” becoming “Sundry,” though I only found three mistakes, and I highlighted them in bold to make them easy to spot. I would guess that a modern monologue recorded on a cell phone mic would be a little more accurate and wouldn’t have made those mistakes, though even with the older audio archive under the small model it is pretty good.

Anyway, I fed the outputted text document to Dolphin and gave it the following system prompt:

Attached is a copy of a transcribed speech. Reformat the transcribed speech in essay form, remove any timestamps, fix punctuation, and make sure it's broken up into readable paragraphs. Do not change any words, however, this has to be a perfect transcription.

Dropdown: Output from Whisper AI run through Dolphin 2.8 Mixtral

When a week ago today, Mr. Speaker, I asked the House to fix this afternoon at the occasion for a statement, I feared it would be my hard lot to announce the greatest military disaster in our long history. I thought, and some good judge agreed with me, that perhaps 20 or 30,000 men might be reimbursed. But it suddenly seemed that the whole of the French First Army and the whole of the British Expeditionary Force, north of the Amia Abbeyal Yap, would be broken up in the open field, or else would have to capitulate for lack of food and ammunition. These were the hard and heavy tidings, which I called upon the House and the nations to prepare themselves a week ago.

The whole root and core and brain of the British Army, on which and around which we were to build and are to build, the great British armies in the later years of the war seemed about to perish upon the field, or be led into an ignominious and starving captivity. The enemy attacked us on all sides with great strength and fierceness, and their main power, the power of their far more numerous air force, was thrown into the battle, or else concentrated upon Dunkirk and the beaches.

Pressing in upon the narrow exit, both from the east and from the west, the enemy began to fire with cannons upon the beaches by which alone the shipping could approach our depart. They sowed magnetic mines in the channels and seas. They sent repeated waves of hostile aircraft, sometimes more than a hundred strong in one formation, to craft their bombs upon the single pier that remained, and upon the sand dunes on which the troops had their only shelter. Now you both, one of which were sunk, and their motor launches took the toll of the vast traffic which now began.

For four or five days an intense struggle rained. All their armored divisions, or what was left of them, together with great masses of infantry and artillery, hurled themselves in vain upon the ever-narrowing, ever-contracting appendix within which the British and French armies fought. Meanwhile the Royal Navy, with the willing help of Countess Merchant Seaman, strained every nerve to embark the British and Allied troops. Two hundred and twenty light warships and six hundred and fifty other vessels were engaged.

They had to operate upon the difficult coast, and often in adverse weather, under an almost ceaseless hail of bombs, and an increasing concentration of artillery fire. Nor were the seas, as I have said, themselves free from mines and torpedoes. It was in conditions such as these that our men carried on with little or no rest for days and nights on end, making trip after trip across the dangerous waters, bringing with them always men who may address you. The numbers they have brought back are the measure of their devotion and their courage.

The hospital ships which brought off many thousands of British and French wounded, being so plainly marked, were a special target for Nazi bombs, but the men and women on board them never faltered in their duty. Meanwhile the Royal Air Force, which had already been intervening in the battle, so far as its range would allow, from our home bases, now used part of its main metropolitan fighter strength, and struck at the German bombers, and at the fighters, which in large numbers protected them.

This struggle was protracted in fear. Suddenly, the scene is cleared. The crash and sundry adds to the moment, but only for the moment, died away. A miracle of deliverance achieved by valor, by perseverance, by perfect discipline, by faultless service, by resource, by skill, by unconquerable fidelity, it manifests to us all. The enemy was hurled back by the retreating British troops.

They were so roughly handled that he did not carry their departure seriously. Sir, we must be very careful not to assign to this deliverance the attributes of a victory. Wars are not won by evacuations, but there was a victory inside this deliverance which would be noted. These were gained by the Air Force.

Many of our soldiers coming back have not seen the Air Force at work. They saw only the bombers, which escaped its protective attack. They underrated its achievements. I’ve heard much talk of this, and that is why I go out of my way to say this. I will tell you about it. This was a great trial of strength between the British and German Air Force.

Can you conceive a greater objective for the Germans in the air than to make evacuation from these beaches impossible, and to sink all these ships which were displayed almost to the extent of thousands? Could there have been an objective of greater military importance and significance for the old purpose of the wars of this? They tried hard, and they were beaten back.

They were frustrated in their task. We got the army away, and they have paid fourfold for any losses which they have inflicted. Sir, when we consider how much greater would be our advantage in defending the air above this island again for no overseas attack, I must say that I find in these facts a shrubb basis upon which practical and reassuring thoughts may rest.

I will pay my tribute to these young Germans. The great French army was very largely for the time being charged back and disturbed by the enraged for a few thousands of armored vehicles. May it not also be that the court of civilisation itself will be defended by the skill and devotion of a few thousand Germans?

There never had been, I suppose, in all the world, in all the history of war, such an opportunity for use. The knights of the round table, the crusaders, all fall back into the past. Not only distant, but protege. These young men going forth every morn to guard their native land and all that we stand for, holding in their hands the instruments of colossal and shattered power of whom it may be said that every morn brought forth a noble chance, and every chance brought forth a noble knight, deserve our gratitude, and do all the brave men in so many ways and on so many occasions are ready and continue ready to give life and all their native land.

For this, our thankfulness, at the escape of our army, and so many men who loved ones have passed through an agonising week, but not blind us to the fact that what happened in France and Belgium is a colossal military disaster. The French army has been weakened, the Belgian army has been lost, a large part of those fortified lines upon which so much faith has been reposed, it’s gone, many valuable mining districts and factories have passed into the enemy’s possession, a whole of the channel ports, 90 hands, there’s all the tragic consequences that follow from that, and we must expect an another blow to be struck, almost immediately, at us or at France.

We are told, sir, that’s how Hitler had a plan for invading the British Isles. This is often being thought of before. When Napoleon laid Boulogne for a year with his fat bottom boats and his grand army, he was told by someone, there are bitter weeds in England. There are certainly a great many more of them since the British expeditionary force returned. Sir, I have my self-full confidence that if all do their duty, if nothing is neglected, and if the best arrangements are made, as they are being made, we should prove ourselves once more able to defend our island home, to ride out to the storm of war, and to our lives of menace of tyranny, if necessary, for years, if necessary, alone. At any rate, that is what we are going to try to do. That is the result of his majesty and government, every man of them.

That is the will of parliament and the nation. The British Empire and the French Republic, linked together in their cause and in their need, will defend to the death their native soil, aiding each other like good comrades to the utmost of their strength. We shall go on to the end, we shall fight in France, we shall fight on the seas and oceans, we shall fight with growing confidence and growing strength in the air, we shall defend our island, whatever the cost may be, we shall fight on the beaches, we shall fight on the landing grounds, we shall fight in the fields and in the streets, we shall fight in the hills, we shall never surrender, and if, which I do not for a moment believe, this island or large part of it was subjugated and starving, then our empire beyond the seas armed and guarded by the British fleet would carry on the struggle until, in God’s good time, the new world with all its power might step forth to the rescue and the liberation of the old.

As you can see, there are no new mistakes. The transcription does contain three (that I noticed), which I bolded again, but they were made by Whisper and not the LLM. In this particular case Whisper AI added punctuation to its output. Although on some other video essays I tried this with Whisper AI did not produce punctuation, Dolphin was still able to add punctuation and paragraphs with no errors regardless.

In the case of YouTube itself, using Whisper AI isn’t necessary. YouTube auto-generates subtitles, which can be downloaded using something like YT-DLP or one of the many YouTube subtitle downloader websites. I was able to download subtitles from a few videos and feed them to the LLM with the same system prompt and was provided the same essay/blogpost style output without errors each time. However, this is me experimenting with transcribing audio, and while YouTube specifically could skip the transcription step, the goal is more so experimenting with taking an audio file of any kind and, without internet or anything proprietary, getting a transcribed document in an easy-to-read format.

Dropdown: Data Hoarding/Archiving Bonus Thoughts

Heck, for something like YouTube, you could probably skip running them through an LLM and just grab subtitles for archiving. Say you wanted to archive every video by a YouTube commentary channel using consumer grade hardware; just point YT-DLP to the channel and tell it to grab the subtitles for all the videos on the channel. Optionally compress the subtitle files further using something like xz (use -9e for the most compression at the cost of the most time to run the job) and stash away everything.

Then, if you want to read through a specific archive, you can feed it into the LLM. Running many archived subtitles through an LLM at once would take a lot of processing power and time, but if you archive the subtitles and run them through the LLM when/if you want to consume them it’s suddenly a lot easier.

Dropdown: Attached Document & Token Troubleshooting

Every LLM is going to have a maximum token size, tokens being a measurement of how much data is in the text that you provide to it in a prompt. It’s almost never an issue with general prompts, and often is not an issue on uploaded files either. For example, while an entire ebook on, say, the history of the Roman Empire might exceed it’s size, it can still do things with parts relevant to the prompt. Say you ask how monetary policy contributed to it’s collapse, it can then find the parts of the ebook file that speak about that, add those parts to it’s memory, and then use those in it’s answer while also providing the location of the info in the document.

However, if you’re doing something like asking it to transcribe an entire document it has to load the entire document, and if it’s too long it might just give some generic response or act like you never shared the document at all. All data in attached documents count towards tokens; for example, while a plain text transcription from Whisper might be short enough, a YouTube auto subtitle file might be 80% bigger due to metadata, causing the latter to fail but not the former - even if they were both from the same audio/video file.

If you’re doing something that needs the entire file loaded in one prompt you’ll want to keep an eye out for a “full injection” message. If it says “citations found” or “no citations found” it’s not loading the entire document and will fail if you’re expecting it to work on the whole document at once. Your best bet is to try again after breaking the document into multiple parts or use an LLM that can process more tokens.

LM studio in developer mode does let you increase the max tokens it’ll feed the LLM, although I’ve found it often fails to load if you crank it up too high. Decreasing how much of the LLM is accelerated with my graphics card does seem to resolve the issues of it failing to load, although that also slows it down a lot. In the future maybe either the LLMs or the software they’re running in will be able to break the file up into multiple pieces on it’s own and then do them bit by bit, but in most cases your best bet is to break the file up into a couple pieces and do them one at a time.

P.S. If you have a subtitle file in something like vtt or srt format just change the extension to .txt and it should work.

There are some limitations to this. There’s no headings or formatting, so reading the plain text output as provided by the LLM looks a bit bland. Further, doing this with video content loses all important imagery, so you’re limited to doing something like this to only video essay/monologue type videos. Finally, Whisper AI will not differentiate audio from different speakers. If you’re trying this on a video/audio file with multiple speakers it will transcribe the text, but there will be some missing context to the text that it outputs4.

Summarization

Generic page/document summarization has had few updates in my experience since my last post. Summaries often miss the point of what I’m giving it unless it’s something really simple like a short news article, and it occasionally gives wrong information. Summarizing long content in Dolphin will usually result in a vague and useless summary (kinda pointless if it can only summarize short content), and the browser plugins often give worse results. Tell Brave to summarize a large page and gives you a heads up it’s too long and summarizing the first x% of the content, and Orbit will refuse to summarize a large page.

While complex stuff (say a comparison of microblogging protocols) is usually really bad, relatively short content with a simple/linear message (e.g., a brief news article that says XYZ happened) does summarize well. Unfortunately, however, it’s probably less effort to skim over that sort of stuff for a summary rather than feed it to an AI. That said, there is some summarization adjacent stuff that I’ve had luck with.

Parsing data/documents

While generic summarization often fails spectacularly, Dolphin’s ability to parse a large document and answer questions about stuff in it is much more helpful. For example, I was able to give it an entire ebook and ask about its content. Not only did it provide an accurate answer to my questions, but it could also cite the location in the book that it was pulling from, so I could easily go to that spot and read it instead of relying on the LLM’s interpretation of the words.

Similarly, when I tested it previously, asking an LLM integrated into a browser about a webpage I was on was also accurate. When I did my original LLM post, I went to one of my blog posts and asked it a bunch of specific questions, all of which provided both detailed and correct responses.

Podcasts

In between starting this post and now, Google released Notebook LM, a tool that can (among other things) generate a podcast based on the document(s) or webpage(s). I gave it a go a few times and posted one of the audio outputs on Nostr, but I thought maybe I could rig something like Dolphin or Gemma to generate a script locally (which could be read by an open source neural voice tool like piper). Long story short, I had no luck. I could generate podcast scripts, but just like generic summaries, I wound up with ones that were too short and vague with occasionally incorrect information.

Some notes about Notebook LM

When I tried Notebook LM I generated a handful of ‘podcasts’ based on my blog posts and took some notes thinking I might make a blog post about them later. I didn’t have enough to say to justify a blog post, so I figured I’d stuff my random notes here. If you’re interested in a full sized blog post about Notebook LM Simon Wilison made a blog post you might find interesting.

Some random notes that I took about things that stood out:

- I got several ad breaks without ads, some variation of “We’ll be right back” followed by a short pause and then a “Now back to the topic.”

- Like any LLM powered tool, it understands language but not its meaning. For example, I tried my EDC post, and when it got to the part about my watch freezing up every day at midnight it went on this long tangent about how when your own things have their quirks, it’s a cool personalization that makes you unique. Um, no. My watch broke :(

- It occasionally got things wrong, like turning me mentioning my car broke down into me being stranded on a road trip.

- When it talked about gluing cuts together it made me sound all cool and hardcore instead of just a clumsy moron.

- It sometimes mis-summarizes complex things. I used my microblog protocol comparison post and it basically said Activity Pub was first, then Nostr was second and fixed some Activity Pub stuff, and then AT (Bluesky) was third and fixed some Nostr stuff. That’s not what the blog post said.

- When I fed it my post about making a hat it endorsed Glacier Ware and the host said they’ve purchased from the company and was pleased. In my hat post I did mention potentially purchasing a pelt from Glacier Ware in the future, but specifically specified that I had not bought anything from them at the time5.

- They said that you can buy a car for $900. I have no idea what digital drugs NotebookLM was on.

- It said ‘sus,’ and I found that funny.

I don’t mean to be too negative; my notes were a little nitpicky because I probably listened to a couple hours of AI podcasts about my blog posts and only made a note when something was wrong or funny. It is worth toying around with, though there are more productive means of consuming information.

Update 10/16/2024: It only felt fitting to through this blog post into notebook LM

Footnotes

-

I don’t mean that I’ve done any extensive testing, just that I’ve done it piece by piece over the course of a long time and eventually decided to assemble my notes into a blog post. ↩︎

-

Graphene OS specifically added the ability to revoke the network permission, unfortunately it’s not part of standard Android. ↩︎

-

I think I just invented the most effective compression algorithm ever. Download a backlog of videos/podcasts and transcribe them to text using an LLM. Then, train an AI model on the creator’s voice and archive that AI model. At the initial cost of storing a few GBs for the AI model, you can compress any primarily audio content with an efficiency of like 99.99%. Yeah, no, I know it’d kinda lose a lot of value in the work if we’re listening to a robot that sounds vaguely like the creator read a text document. Still something to think on though, and archiving video/audio in text form might not be a bad idea; platforms don’t last forever and archiving text/extracted subtitles is way more feasible. We consume content compressed with lossy compression all the time (compression that impacts the data quality). A good video essay would still be worth consuming as text or TTS audio, and from a data preservation standpoint, having a ton of data ‘compressed’ in this manner is still infinitely better than not having it at all. ↩︎

-

There are tools like Pyannote that can differentiat who is speaking. I ran that and gave the LLM a Whisper AI transcript plus the metadata from Pyannote. However, I had no luck getting the LLM to process something from multiple speakers and provide an easily read document. That said, I didn’t try for more than a few minutes, so there could be a way to get it working. ↩︎

-

After making the blog post I purchased a pelt from them and made a hat with it. The hat turned out pretty good, and Glacier Ware seems like a great place to buy that sort of stuff. Still, my blog post clarified that I had not purchased from them at the time, and Notebook LM endorsed them regardless. ↩︎